Table of Contents

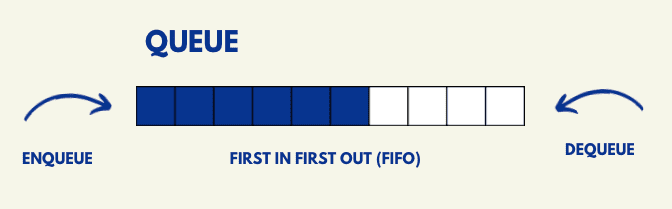

ToggleQueues are one of the most fundamental data structures in computer science. A queue follows the first-in, first-out (FIFO) principle – the item that is added first is the item that is removed first. There are widespread Queue applications in computing systems and in our daily lives.

In this article, we will first give a brief overview of queues and then look into queue applications in computing and queue application in data structure and algorithms. We will then explore some key concepts related to queues – their definition, characteristics, and different types of queues and comparative analysis of queues versus other data structures. So let’s dive in.

Brief Overview of Queues

A queue is a linear data structure that follows the FIFO order. Elements are added from one end called the rear, and removed from the other end called the front. Queues maintain order – the element added first will be processed first.

Queues allow efficient insertion and deletion of elements. Adding an element takes O(1) time, as we simply insert at the rear. Removing an element also takes O(1) time, as we simply remove from the front.

Queues provide a buffer or waiting area for objects. For example, in a processing pipeline, if one stage finishes faster than the next stage, the data waiting to be processed can be held in a queue.

There are many Queue applications in computing – from operating systems and process management to network bandwidth allocation. They allow smooth data handling and transition between different stages of operation.

Queue Applications in Computing

Here are some reasons why queues are an indispensable component in most computer systems. Some Queue Applications in Computing are:

- Ordering: Queues preserve the chronological order of elements added to them. This sequencing is critical in many computing pipelines.

- Synchronization: Queues allow smooth data flow between producers and consumers, avoiding bottlenecks. The producers add data to the rear while consumers remove data from the front.

- Resource Sharing: Queues fairly share resources between data sources – elements are processed in FIFO manner. This prevents starvation and optimizes throughput.

- Traffic Control: Queues handle bursts of data without loss by providing a wait area. Network routers use queues to prevent packet loss when traffic spikes.

- Asynchronous Events: Queues allow events to be handled asynchronously in a non-blocking way by providing a buffer area. For example, in IO handling.

- Workflow: Complex workflows can be modeled as a sequence of queues with elements flowing between different queues at various stages.

In summary, queue applications in computing are for ordering, synchronization, resource sharing, traffic control and asynchronous event handling in computing systems. They enable efficient workflows and prevent data loss.

Application Of Queue In Real-Life

Queues are ubiquitous in real-world systems. Let us go through some common queue applications across different domains:

Order Processing Systems

- At ecommerce websites, orders are placed in a queue and processed sequentially. Sellers can efficiently manage a spike in orders.

- Food delivery platforms like Zomato queue up incoming orders. The delivery partners pick up orders for delivery based on time of order.

- Call centers queue up incoming customer calls and assign to the next available service agent. Calls are answered in the order they arrive.

Ticket Booking Systems

- Online movie and event ticket booking applications use queues to manage ticket requests. Requests are served in order of arrival.

- Ride-sharing apps like Uber queue booking requests during peak times. Nearby cabs are assigned requests sequentially.

Data Buffering

- Network switches use queues to buffer incoming packets if multiple packets arrive at the same time. Packets are then processed in order.

- Operating systems queue file IO requests. The disk driver reads and writes data in the order that requests were made.

- Print queues on operating systems manage print jobs by storing them sequentially before sending to printer.

Task Scheduling

- In CPUs, the scheduler uses a queue to store processes waiting for the processor. Scheduling algorithms like FCFS use queue order.

- Web servers queue incoming HTTP requests and release workers to handle them in FIFO order.

- Batch video rendering software queues frames and dispatches them to GPU cores based on time added to queue.

Call Center Systems

- In call centers, callers are put in a waiting line and routed to the next available agent in queue order.

- Support tickets are queued up and assigned to agents based on factors like priority and time received.

Traffic Management

- Traffic lights use queues to manage waiting vehicles and alter signals based on traffic buildup.

- Toll booths on highways use queues to manage vehicle flow. Vehicles are served on FIFO basis to prevent congestion.

Network Packet Handling

- Routers have input and output queues to handle spikes in network traffic. Packets are processed in FIFO order from these queues.

- Rate limiting queues are used to manage bandwidth usage. Only specified number of packets are forwarded from the queue periodically.

Entertainment and Media

- Music apps queue up songs in a playlist or queue. Songs are played sequentially in the order they were added.

- Video streaming platforms use prefetch queues to buffer content before playing. This compensates for network lags.

Queue Application In Data Structure And Algorithms

Now that we have seen some common queue applications, let’s discuss some advanced Queue application in data structure and Algorithms for problems like graph traversal and memory management.

Here are some key queue application in data structure and algorithms:

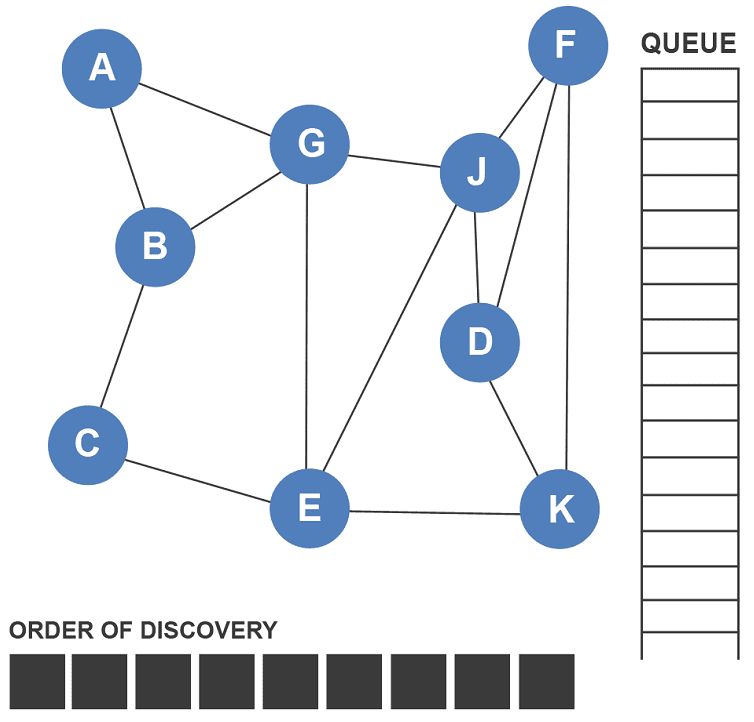

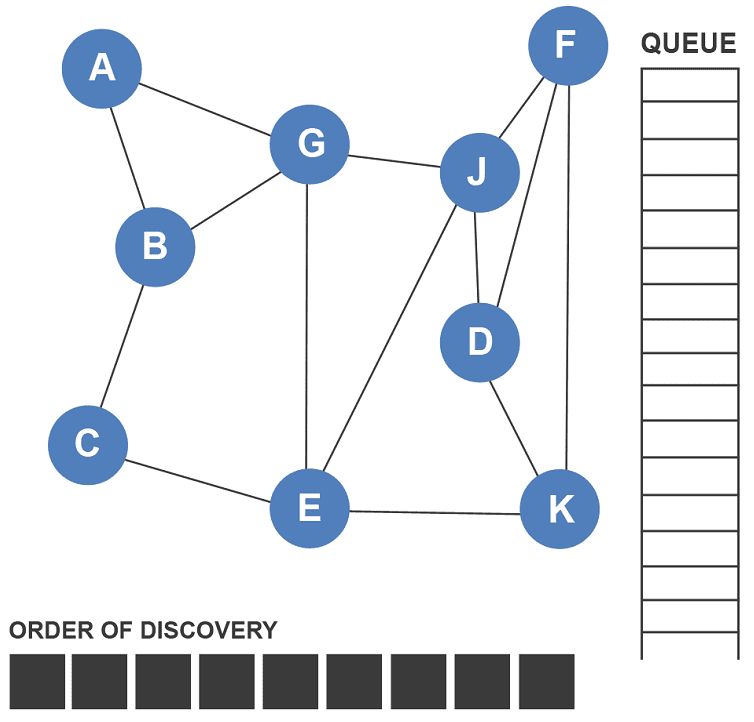

Breadth First Search in Graphs

Breadth first search approaches graph traversal level by level from a source vertex. A queue stores vertices of each level, adds vertices of next level at back and removes vertices of current level from front to process them.

Here is the pseudo code for BFS graph traversal using a queue:

- Create a visited array of size V (number of vertices) and mark all vertices as not visited

- Create a queue Q

- Add source vertex s to Q and mark s as visited

- While Q is not empty:

- Remove the front vertex u from Q

- Print u

- For each neighbor v of u:

- If v is not visited:

- Mark v as visited

- Enqueue v to Q

The pseudo code steps are:

- Initialise visited array and queue

- Add starting vertex to queue and mark visited

- Loop while queue is not empty

- Dequeue front vertex

- Print dequeued vertex

- Enqueue and mark unvisited neighbors

- Repeat until queue is empty

Memory Management and Garbage Collection

Mark-and-sweep garbage collectors use a queue to store objects to be scanned for references. All referenced objects are “marked” and unreferenced ones are deleted after scanning entire queue.

Load Balancing in Distributed Systems

Load balancers use queues to distribute incoming client requests across backend servers. Requests are enqueued and dequeued in sequence and distributed round-robin across servers.

Blocking Queue

Used in thread pools and producer-consumer problems. Blocks threads trying to dequeue from empty queue or enqueue into full queue.

Job Scheduling

Operating systems use queues like ready queue to schedule jobs and processes using algorithms like FCFS, SJF etc.

Dynamic Programming

Some DP solutions use queues. For example, queues in BFS DP over trees and grids for shortest paths.

Data Buffering

Queues used to buffer data from fast producers to slower consumers. For example, queueing layers between networks.

Congestion Control

Queues help absorb temporary bursts in traffic and prevent packet loss. Used in rate limiting, routers etc.

So in summary, queues have diverse applications in search, synchronization, workflows, memory management, scheduling, data transport and load distribution in data structures and algorithms.

Fundamental Concepts

Now that we have seen an queue applications, let us dive deeper into some fundamental concepts.

Definition and Characteristics of a Queue

A queue is a linear data structure that stores elements in a sequence and allows addition at one end (rear) and removal at the other end (front) in a FIFO (First-In First-Out) order.

The key characteristics of queues are:

- Ordered: Elements are stored and accessed in a specific order (FIFO).

- Sequential: Elements are added at the rear and removed from the front. New elements are always added at the back.

- Singular Access: At any point, we can only access the front element for removal and the last element for addition.

- Bounded: A queue is typically bounded to a maximum capacity for practical implementation purposes.

- Dynamic: The number of elements in a queue changes dynamically as elements are added and removed over time.

Queues provide FIFO access, ordered insertion and sequential access to elements in an efficient manner. These characteristics make them useful for a variety of use cases.

Different Types of Queues

There are different types of queue data structures used in practice:

Linear Queue

In a linear queue, elements are stored in a linear sequence exactly in the order of insertion. Linear queues follow FIFO fully and their implementation is straightforward using arrays or linked lists.

Circular Queue

A circular queue is also a linear FIFO queue but it reuses empty slots in a circular fashion. This makes it memory-efficient as we do not need to move elements continuously like in a linear queue.

Priority Queue

In a priority queue, every element has a certain priority. Elements are removed based on highest priority rather than FIFO. Priority queues are used in schedulers and Huffman coding.

Deque (Double Ended Queue)

Deques allow insertion and removal from both front and back. However, ordered sequence is still maintained – elements are removed in the same order as insertion. Deques provide flexibility of accessing both ends.

There are also other variations like LIFO (Last-In First-Out) queues that reverse the order of removal. But FIFO is the most common queuing order used in real systems.

Comparative Analysis

Let us now compare queues with other linear data structures like stacks and discuss their relative advantages.

Queues vs Other Data Structures

Here is a comparison of queues with other linear data structures in a table format:

| Feature | Queue | Stack | List |

|---|---|---|---|

| Order | FIFO | LIFO | Unordered |

| Main Operations | Enqueue, Dequeue | Push, Pop | Insert, Delete |

| Placement of new element | Rear | Top | Anywhere |

| Removal of element | Front | Top | Anywhere |

| Use Cases | Workflow sequencing, message passing | Function calls, expression evaluation | Generic data storage and access |

| Time Complexity | O(1) for enqueue and dequeue | O(1) for push and pop | O(n) for worst-case insert and delete |

| Locality | Better cache usage due to sequential access | Poor cache usage due to LIFO | Moderate locality |

In summary:

- Queues follow FIFO while stacks follow LIFO for element ordering. Lists are unordered.

- Main operations differ – queues use enqueue and dequeue while stacks use push and pop. Lists allow generic insert and delete.

- New elements are added at rear in queues, top of stack, and anywhere in lists.

- Element removal is from front of queue, top of stack and any position in lists.

- Use cases differ – queues for workflow sequencing, stacks for function calls, lists for generic access.

- Queues have optimal O(1) time for enqueue/dequeue while lists have O(n) for worst-case insert/delete.

- Queues have better data locality due to sequential access from front and rear.

Implementation and Algorithms Of Queue

Let’s look at how queues are actually implemented in code and the algorithms for basic queue operations.

Queue Basic Operations: Enqueue, Dequeue, Peek

Queues support three fundamental operations:

Enqueue – Add an element to the rear of the queue.

Pseudocode:

// Enqueue enqueue(q, x) q.rear <- q.rear + 1 q[q.rear] <- x

Time Complexity: O(1)

Dequeue – Remove an element from the front of the queue.

Pseudocode:

// Dequeue dequeue(q) x <- q[q.front] q.front <- q.front + 1 return x

Time Complexity: O(1)

Peek – Get the element at the front without removing it.

Pseudocode:

//Peek peek(q) return q[q.front]

Time Complexity: O(1)

These basic operations allow us to add and access elements in an efficient FIFO manner. Enqueue and dequeue take constant time which makes queues suitable for many applications.

Implementing Queues using Arrays and Linked Lists

Queues can be implemented using both arrays and linked lists:

Array Implementation Of Queue

Maintain front and rear indices, increment rear to enqueue, increment front to dequeue. Use modular arithmetic to reuse slots.

Here is an example Java implementation of a queue using arrays:

// Queue Applications: Array Implementation Of Queue

//Queue class

public class Queue {

// Properties

private int[] arr; // array to store queue elements

private int front; // front points to front element in queue

private int rear; // rear points to last element in queue

private int capacity; // maximum capacity of the queue

// Constructor to initialize queue

public Queue(int size) {

arr = new int[size];

capacity = size;

front = 0;

rear = -1;

}

// Utility function to remove front element

public void dequeue() {

// check for queue underflow

if (isEmpty()) {

System.out.println("UnderFlow");

System.exit(1);

}

front++;

}

// Utility function to add an item to the queue

public void enqueue(int item) {

// check for queue overflow

if (isFull()) {

System.out.println("OverFlow");

System.exit(1);

}

rear++;

arr[rear] = item;

}

// Returns true if the queue is empty

public boolean isEmpty() {

return front > rear;

}

// Returns true if the queue is full

public boolean isFull() {

return capacity == rear + 1;

}

public static void main(String[] args) {

// create a queue of capacity 5

Queue q = new Queue(5);

q.enqueue(1);

q.enqueue(2);

q.enqueue(3);

System.out.println("Front item is: " + q.arr[q.front]);

q.dequeue();

System.out.println("Front item is: " + q.arr[q.front]);

q.enqueue(4);

while(!q.isEmpty()) {

System.out.print(q.arr[q.front] + " ");

q.dequeue();

}

}

}This implements the queue using a fixed size array. The front and rear indices track the start and end of the queue. Enqueue involves inserting at rear index and dequeue involves removing from front. It also checks for overflow and underflow conditions.

Linked List Implementation Of Queue

Maintain front and rear pointers, add new nodes at rear, remove from front via front pointer.

Here is an implementation of a queue using linked list in Java:

// Queue Applications: Linked List Implementation Of Queue

// QueueNode class

class QueueNode {

int data;

QueueNode next;

public QueueNode(int data) {

this.data = data;

this.next = null;

}

}

// Queue class

public class Queue {

// Front and rear pointers

private QueueNode front, rear;

public Queue() {

this.front = this.rear = null;

}

// Method to add element to the queue

public void enqueue(int data) {

// Create new node

QueueNode newNode = new QueueNode(data);

// If queue is empty, initialize front and rear pointer

if(this.rear == null) {

this.front = this.rear = newNode;

return;

}

// Add newNode at the end and update rear pointer

this.rear.next = newNode;

this.rear = newNode;

}

// Method to remove element from the queue

public void dequeue() {

// If queue is empty

if (this.front == null)

return;

// Store previous front and move front one node ahead

QueueNode temp = this.front;

this.front = this.front.next;

// If front becomes null, set rear also as null

if (this.front == null)

this.rear = null;

}

public static void main(String args[]) {

Queue q = new Queue();

q.enqueue(10);

q.enqueue(20);

q.dequeue();

q.enqueue(30);

q.enqueue(40);

q.dequeue();

System.out.println("Queue Front : " + q.front.data);

System.out.println("Queue Rear : " + q.rear.data);

}

}This implementation maintains front and rear pointers to keep track of the queue. New nodes are added at rear and removals happen from front.

Arrays allow O(1) access while linked lists require O(n) time to reach a position. But linked lists are more dynamic and do not need reindexing or resizing arrays. The optimal implementation depends on the use case and tradeoffs between access time and flexibility.

Future Trends and Innovations In Queue

Let’s now look at how queue implementations and queue applications can evolve in the future with new innovations.

Evolution of Queue-based Systems

- Prioritized queues can improve QoS for critical tasks and emergency requests by scheduling them first. This can enhance workflows.

- Distributed queues can handle huge loads and faults by spreading a logical queue across servers. This improves scalability.

- In-memory queues offer lower latency by caching queue data in fast RAM instead of disk. Dequeuing latency benefits the most.

- Lock-free queues avoid locking overheads of thread-safe queues. Multiple threads can access safely using atomic operations.

Potential Breakthroughs in Queue Applications

Some of the potential breakthroughs in Queue Applications are:

- Real-time image and video processing by buffering frames into intelligent processing queues. This unlocks new interactive apps.

- Machine learning to optimize queue behaviour for cost, latency and throughput tradeoffs. This allows automated queue tuning.

- Leveraging queues for distributed ledger transactions to order operations cryptographically and achieve consensus.

- Low-latency edge computing using queues to batch and sequence tasks across endpoints. Reduces cloud dependency.

- Game theory and queueing theory to model human queues and minimize wait times. Applicable to traffic, airports etc.

Conclusion

Let us now recap the key points about queues and queue applications.

Recap of Key Points

- Queues are FIFO data structures that provide sequenced access to elements.

- Queues enable order, synchronization and smooth workflows in computing.

- Applications range from CPU scheduling to data buffers, networks and multimedia.

- Queues can be implemented efficiently using arrays or linked lists.

- Future innovations include intelligent queues for real-time stream processing.

Encouraging Further Exploration

Queues are a fundamental concept with expansive use cases. There is a lot more to explore:

- Implementing circular queues, priority queues, double-ended queues.

- Optimized algorithms like skew heaps for priority queues.

- Probabilistic analysis of queue behavior using queuing theory.

- Hardware optimizations for low-latency, high-throughput queue architectures.

We encourage readers to further explore these advanced aspects of queue theory, algorithms and implementations.

Try out our free resume checker service where our Industry Experts will help you by providing resume score based on the key criteria that recruiters and hiring managers are looking for.

References and Further Reading

- Data Structures and Algorithms in Java, Goodrich et al. Wiley.

- Introduction to Algorithms, Cormen et al. MIT Press.

- Operating Systems: Three Easy Pieces, Remzi and Arpaci-Dusseau. Arpaci-Dusseau Books.

- Queueing Theory, Kleinrock. Wiley.

- Designing Data-Intensive Applications, Kleppmann. O’Reilly.

FAQ Regrading Queue Applications

How are queues used in operating systems?

In OS, queues are used for scheduling processes, buffering I/O requests, spooling print jobs, managing memory allocation, synchronizing threads, and other order-sensitive tasks.

When would a queue be preferred over a stack?

Queues are preferred when order of processing matters, like first-come-first-serve. Stacks suit use cases where last-in-first-out order like function calls is needed.

What are priority queues used for?

Priority queues are used in systems that need to process higher priority elements first, like emergency rooms, network traffic control, airline boarding etc.

How do queues help with load balancing?

Queues evenly distribute incoming requests across servers. Requests are buffered in a queue and assigned to servers in round-robin order to spread load.

What queue algorithms are used in OS process scheduling?

FCFS, SJF, Priority, Round Robin are common queue-based scheduling algorithms used in OS. The OS scheduler maintains queues of processes based on these policies.

How many queues are required to implement a stack?

To implement a stack using queues, you typically need two queues.The basic idea is to use one of the queues as the primary data storage for the stack, while the other queue is used for temporary storage during stack operations.