In this article, we look at load balancers in system design: what they are, how they work, and how they are used to improve system performance. Let's get started.

What is a Load Balancer In System Design?

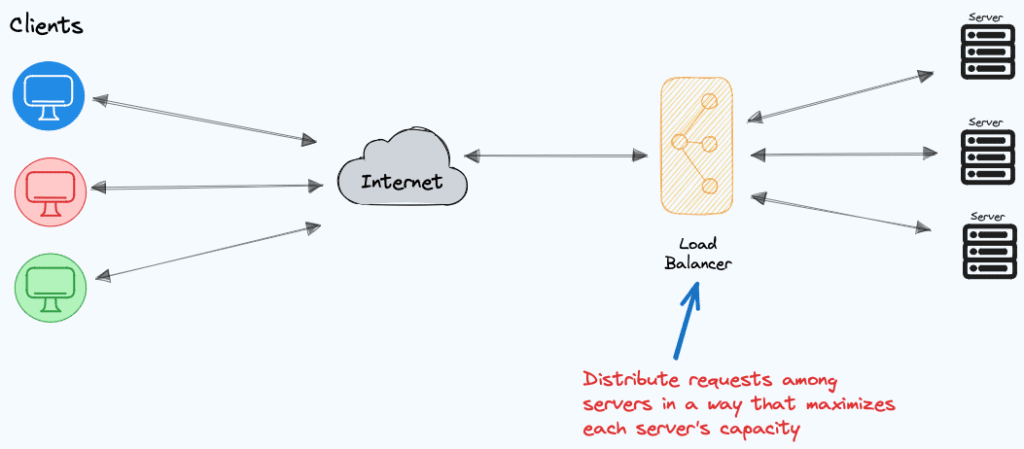

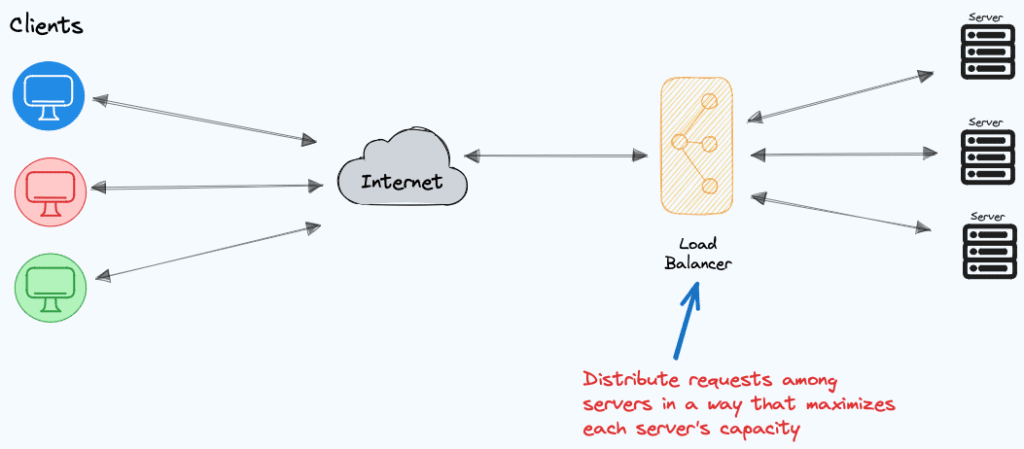

A load balancer in system design is a component that distributes incoming network traffic and requests across a group of backend servers (such as web servers or application servers). It helps avoid overloading any single resource by spreading requests across multiple servers that handle them in parallel.

What is a load balancer used for?

- Increased Capacity: Load balancers distribute incoming traffic across multiple backend servers, effectively increasing the overall capacity of the system. By spreading requests, a load balancer keeps any single server from becoming overwhelmed, allowing the system to handle a higher volume of concurrent users and requests.

- Availability: Load balancers enhance system availability by introducing redundancy. If one server fails or experiences issues, the load balancer redirects traffic to healthy servers so that service continues. This high-availability architecture minimizes downtime and improves reliability, even in the face of server failures.

- Improved Scalability and Flexibility: Load balancers make it easy to scale resources up or down. When demand increases, additional backend servers can be added to the pool. Conversely, during periods of lower demand, servers can be removed without disrupting ongoing operations.

- Performance: Load balancers improve performance by optimizing the distribution of requests and, in some cases, caching frequently accessed content. Clients can experience lower latency when the load balancer directs them to a server that is able to respond quickly.

How does a Load Balancer work?

A load balancer sits between the client and the group of backend servers, accepting incoming requests on a public IP address. Based on an algorithm (such as round robin) and on backend server health and metrics, it selects an appropriate server and forwards each request to it. Server responses typically travel back through the load balancer to the client that made the request.

For instance, if a load balancer handles 1000 incoming requests, it might distribute 500 to App Server 1 and 500 to App Server 2, preventing any single server from being overwhelmed and ensuring balanced utilization of resources. This not only enhances the system’s capacity to handle more requests but also significantly improves overall performance by preventing bottlenecks.

Additionally, the load balancer conducts periodic health checks on the backend servers, ensuring that requests are only forwarded to servers that are available and in good health. This proactive monitoring adds a layer of reliability, minimising downtime by redirecting traffic away from servers experiencing issues.
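The health-check step can be sketched as follows. This is a minimal illustration, not how any particular product does it: the `Backend` class, the `probe` callback, and the server names are assumptions made for the example. A real probe would usually be a TCP connect or an HTTP request to a health endpoint.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    healthy: bool = True


def run_health_checks(backends, probe):
    """Update each backend's health flag using the supplied probe function."""
    for backend in backends:
        backend.healthy = probe(backend)


def eligible_backends(backends):
    """Return only the backends that passed their most recent health check."""
    return [b for b in backends if b.healthy]


# Hypothetical pool in which app-2 fails its probe.
pool = [Backend("app-1"), Backend("app-2"), Backend("app-3")]
run_health_checks(pool, probe=lambda b: b.name != "app-2")

# Only healthy servers remain candidates for new requests.
candidates = [b.name for b in eligible_backends(pool)]
print(candidates)  # ['app-1', 'app-3']
```

Whatever selection algorithm is in use would then choose only from `candidates`.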

What are the types of load balancing algorithms?

Load balancers use various algorithms to intelligently distribute incoming traffic among backend servers. Common load balancing algorithms include:

Round Robin

In the Round Robin algorithm, the load balancer cycles through the list of servers sequentially, forwarding each new request to the next server in line. This ensures an even distribution of requests among the servers, preventing any single server from becoming overloaded. Round Robin is a simple and effective way to balance the load across multiple servers.
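As a rough sketch of the idea, the class below cycles through a fixed list of servers. The class name and server names are hypothetical, and the example reuses the 1000-request scenario from earlier in the article.

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hand out servers in a fixed, repeating order."""

    def __init__(self, servers):
        self._servers = cycle(servers)

    def pick(self):
        # Each call returns the next server in the rotation.
        return next(self._servers)


balancer = RoundRobinBalancer(["app-server-1", "app-server-2"])

# Simulate 1000 incoming requests and count where each one lands.
counts = Counter(balancer.pick() for _ in range(1000))
print(counts["app-server-1"], counts["app-server-2"])  # 500 500
```

Note that the split is even in request count only. Round robin does not account for how expensive each request is.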

Least Connections

The Least Connections algorithm directs a new request to the server with the fewest active connections at that moment. This approach distributes load based on how busy each server currently is, rather than on a fixed rotation.

It is particularly useful when requests vary in duration or servers have varying workloads, because a server that is tied up with long-running connections automatically receives fewer new ones.
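A minimal sketch of this bookkeeping, assuming the balancer is told when a connection opens and closes (the `acquire` and `release` method names are made up for this example):

```python
class LeastConnectionsBalancer:
    """Track active connections per server and pick the least busy one."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Choose the server with the fewest active connections.
        # Ties go to the server listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when the connection to `server` closes.
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["a", "b", "c"])
first, second, third = lb.acquire(), lb.acquire(), lb.acquire()
lb.release(second)     # the connection on "b" finishes early
fourth = lb.acquire()  # "b" is now the least loaded, so it is picked again
print(first, second, third, fourth)  # a b c b
```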

IP Hash

IP Hashing involves hashing the client’s IP address and consistently mapping requests to the same backend server based on the hash result. This method ensures that a specific client’s requests are consistently directed to a particular server.

IP Hashing is advantageous for scenarios where maintaining session persistence or state is crucial, as it ensures continuity in handling a user’s requests by routing them to the same server each time.
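One simple way to sketch this is to hash the client address and take the result modulo the number of servers. The function name, server names, and choice of SHA-256 are assumptions for illustration. Real load balancers use their own hash functions.

```python
import hashlib


def pick_by_ip(client_ip, servers):
    """Map a client IP to a server; the same IP always maps to the same server."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer, then wrap to the pool size.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]
chosen = pick_by_ip("203.0.113.7", servers)

# Repeated requests from the same address land on the same server.
assert chosen == pick_by_ip("203.0.113.7", servers)
```

With plain modulo hashing, adding or removing a server changes the mapping for most clients. Consistent hashing is a common way to reduce that reshuffling.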

Weighted Round Robin

Weighted Round Robin introduces a customizable weight assignment to each server based on its capacity or performance capabilities. Servers with higher weights receive a proportionally larger share of incoming requests.

This flexibility allows system administrators to fine-tune the distribution, ensuring that more powerful servers handle a greater portion of the workload, thus optimizing overall system performance.
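A simple way to illustrate weighting is to repeat each server in the rotation as many times as its weight. This is only a sketch with hypothetical server names and weights. Production implementations usually interleave picks more smoothly so that a heavy server does not receive long bursts.

```python
from collections import Counter
from itertools import cycle


def weighted_cycle(weights):
    """Build a repeating rotation in which each server appears `weight` times."""
    expanded = [server for server, weight in weights.items() for _ in range(weight)]
    return cycle(expanded)


# "big" is assumed to have three times the capacity of "small".
picker = weighted_cycle({"big": 3, "small": 1})
counts = Counter(next(picker) for _ in range(400))
print(counts["big"], counts["small"])  # 300 100
```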

Least Response Time

The Least Response Time algorithm directs new requests to the server with the shortest response time, measured by the time it takes to process and respond to a request.

This real-time assessment ensures that requests are routed to the server that can deliver the quickest response, thereby enhancing the overall user experience. This algorithm is particularly beneficial in scenarios where minimizing latency is a critical factor.
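The sketch below shows one possible way to track response times, using an exponentially weighted moving average so that recent measurements count more. The smoothing factor `alpha`, the class, and the server names are assumptions for this example. The article does not specify how response time is measured.

```python
class LeastResponseTimeBalancer:
    """Pick the server with the lowest smoothed response time."""

    def __init__(self, servers, alpha=0.3):
        self.alpha = alpha
        # Servers start at 0.0, so an unmeasured server is tried first.
        self.avg_ms = {server: 0.0 for server in servers}

    def pick(self):
        return min(self.avg_ms, key=self.avg_ms.get)

    def record(self, server, elapsed_ms):
        # Blend the new measurement into the running average.
        previous = self.avg_ms[server]
        self.avg_ms[server] = (1 - self.alpha) * previous + self.alpha * elapsed_ms


lb = LeastResponseTimeBalancer(["fast", "slow"])
lb.record("fast", 20.0)
lb.record("slow", 200.0)
choice = lb.pick()  # "fast" has the lower average, so it is chosen
```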

To learn more, see the related post, Different Ways Of Routing Requests Through Load Balancer.

What are the Types of Load Balancers?

Load balancers can be divided into various types based on different criteria.

Based on Configurations

There are mainly three types of load balancers based on configurations:

- Hardware Load Balancers

- Software Load Balancers

- Cloud-Based Load Balancers

Hardware Load Balancers

Hardware Load Balancers are specialized physical devices designed exclusively for managing and distributing network traffic across multiple servers. These dedicated appliances provide high-performance load balancing capabilities, leveraging custom-designed hardware components.

Some examples of Hardware Load Balancers in the market include F5 BIG-IP and Citrix ADC. These devices are ideal for handling large-scale applications and critical workloads, offering features like SSL termination, advanced traffic management, and high availability.

Software Load Balancers

Software Load Balancers leverage software applications and protocols to distribute incoming traffic among servers. Unlike their hardware counterparts, these solutions are implemented on general-purpose servers, making them more flexible and cost-effective.

NGINX and HAProxy are popular examples of software load balancers. They are widely used in virtualized environments, containerized applications, and scenarios where scalability and adaptability are paramount.

Cloud-Based Load Balancers

Cloud-Based Load Balancers are offered as managed services by cloud providers, providing scalability and ease of configuration. Examples include AWS Elastic Load Balancing (ELB), Google Cloud Load Balancing, and Azure Load Balancer.

These services enable users to distribute traffic across multiple servers within the cloud infrastructure, automatically scaling to accommodate varying workloads. Cloud-based load balancers offer the advantage of seamless integration with other cloud services, providing a comprehensive solution for applications deployed in cloud environments.

Based on Functions

There are mainly two types of load balancers based on functions:

- L4 Load Balancer

- L7 Load Balancer

L4 Load Balancer

A Layer 4 (L4) Load Balancer operates at the transport layer, inspecting TCP/UDP headers to distribute incoming network traffic. It makes decisions based on factors such as source and destination IP addresses and ports, without looking at the content of the request.

By working at a lower level in the OSI model, L4 load balancers are well-suited for handling large-scale network traffic and ensuring optimal resource utilization.

Examples of L4 load balancers include products from companies like Kemp Technologies and Barracuda Networks.

L7 Load Balancer

In contrast, a Layer 7 (L7) Load Balancer operates at the application layer, providing a more granular approach to traffic management. This type of load balancer understands and interprets protocols at the application layer, such as HTTP, allowing it to make routing decisions based on information found in HTTP headers and server responses.

L7 load balancers are particularly effective in environments where application-specific considerations, such as content-based routing and session persistence, are crucial.

NGINX and F5 BIG-IP are examples of popular L7 load balancing solutions, offering advanced application-aware features.
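Content-based routing can be sketched as a lookup from URL path prefix to a pool of servers. The route table, pool names, and `choose_pool` function are hypothetical and only illustrate the kind of decision an L7 load balancer can make because it sees the HTTP request.

```python
# Hypothetical routing table: path prefix -> backend pool.
ROUTES = [
    ("/api/", ["api-1", "api-2"]),
    ("/static/", ["static-1"]),
]
DEFAULT_POOL = ["web-1", "web-2"]


def choose_pool(path):
    """Return the backend pool for a request path, falling back to the default."""
    for prefix, pool in ROUTES:
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL


api_pool = choose_pool("/api/orders/42")  # ['api-1', 'api-2']
home_pool = choose_pool("/index.html")    # ['web-1', 'web-2']
```

An L4 load balancer could not make this distinction, since the path is not visible in TCP/UDP headers. Any of the algorithms above can then be applied within the chosen pool.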

Stateless vs. Stateful Load Balancing

Stateless load balancing treats each request independently without maintaining any knowledge of previous interactions, making it suitable for stateless protocols. On the other hand, stateful load balancing retains information about the connection state, allowing for features like session persistence, but it introduces complexities and potential scalability challenges.

| Feature | Stateless Load Balancing | Stateful Load Balancing |
|---|---|---|
| Connection Handling | Treats each request independently. | Maintains awareness of the connection state. |
| Persistence | No awareness of the client’s previous requests. | Maintains session information for persistence. |
| Scalability | Typically more scalable due to simplicity. | May have limitations due to state awareness. |
| Fault Tolerance | Resilient to server failures as each request is independent. | Requires mechanisms to synchronize state information, impacting fault tolerance. |
| Complexity | Simpler implementation with lower overhead. | More complex due to the need for state management. |
| Use Cases | Suitable for stateless protocols and applications. | Preferred for applications requiring session persistence and state awareness. |
| Examples | Round Robin, Least Connections. | Source IP Hashing, Least Response Time. |
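To make the stateful side of the comparison concrete, the sketch below keeps a session table that pins each session to the server it was first assigned. The class name, session identifiers, and server names are assumptions for illustration. The `_sessions` dictionary is exactly the state that a stateless balancer avoids keeping.

```python
from itertools import cycle


class StickySessionBalancer:
    """Assign new sessions round-robin, then keep each session on its server."""

    def __init__(self, servers):
        self._next_server = cycle(servers)
        self._sessions = {}  # session_id -> server; must be shared or replicated for failover

    def pick(self, session_id):
        if session_id not in self._sessions:
            # First request of this session: assign the next server in rotation.
            self._sessions[session_id] = next(self._next_server)
        return self._sessions[session_id]


lb = StickySessionBalancer(["s1", "s2"])
a1 = lb.pick("alice")  # new session, assigned s1
b1 = lb.pick("bob")    # new session, assigned s2
a2 = lb.pick("alice")  # existing session, stays on s1
```

If the load balancer holding this table fails, the mapping is lost unless it is synchronized elsewhere, which is the fault-tolerance cost noted in the table.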

FAQ

Which layer does a load balancer operate at?

Load balancers can operate at Layer 4 (transport layer) or Layer 7 (application layer) of the OSI model. Layer 4 load balancers analyze TCP/UDP headers, while Layer 7 load balancers work at the application layer, understanding protocols like HTTP for more granular traffic management.

What is the difference between a hardware load balancer and a software load balancer?

Hardware load balancers are dedicated physical appliances optimized for performance, while software load balancers leverage software applications and protocols, offering flexibility and cost-effectiveness. The choice depends on specific scalability and resource utilization requirements.

How does a Layer 4 load balancer differ from a Layer 7 load balancer in terms of functionality?

Layer 4 load balancers operate at the transport layer, analyzing TCP/UDP headers for traffic distribution, while Layer 7 load balancers work at the application layer, understanding protocols like HTTP for more precise routing decisions based on application-specific information. The selection depends on the desired level of granularity in traffic management.