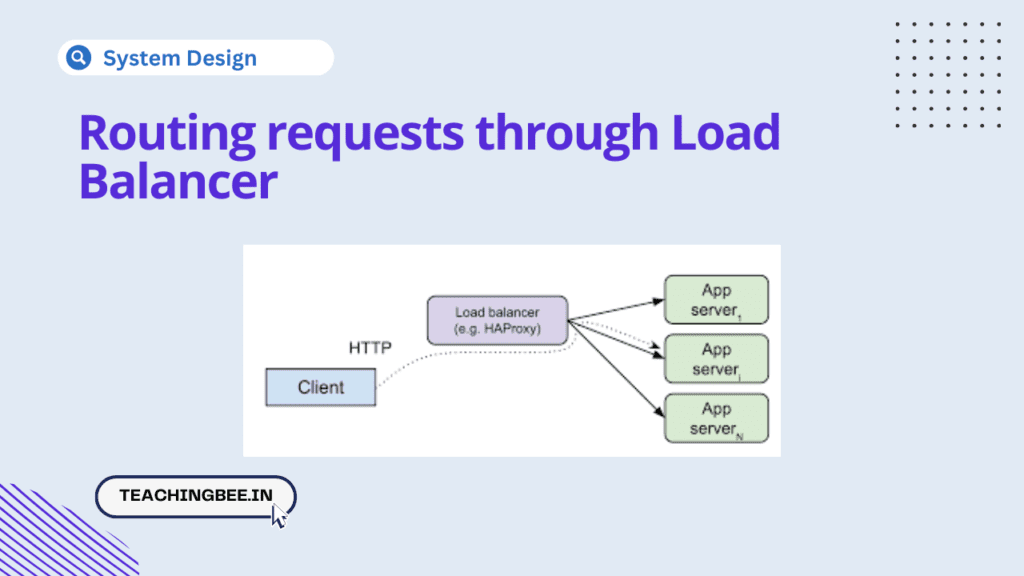

Like a high-tech traffic controller, a load balancer oversees and directs incoming requests to maintain optimal performance. To route traffic, it uses one of several algorithms, each of which takes a different approach to mapping requests to backend servers. Round robin simply spreads requests equally, while more advanced algorithms like least connections and weighted round robin consider additional factors when making routing decisions. Other methods like IP hash provide consistency, sending requests from a given IP address to the same server every time. In this post, we will explore some of the most common routing algorithms used in modern load balancers and the advantages of each. Whether simple or sophisticated, these algorithms work behind the scenes to keep your applications running smoothly as traffic changes.

The algorithms covered are:

- Round Robin

- Least Connections

- IP Hash

- Weighted Round Robin

- Least Response Time

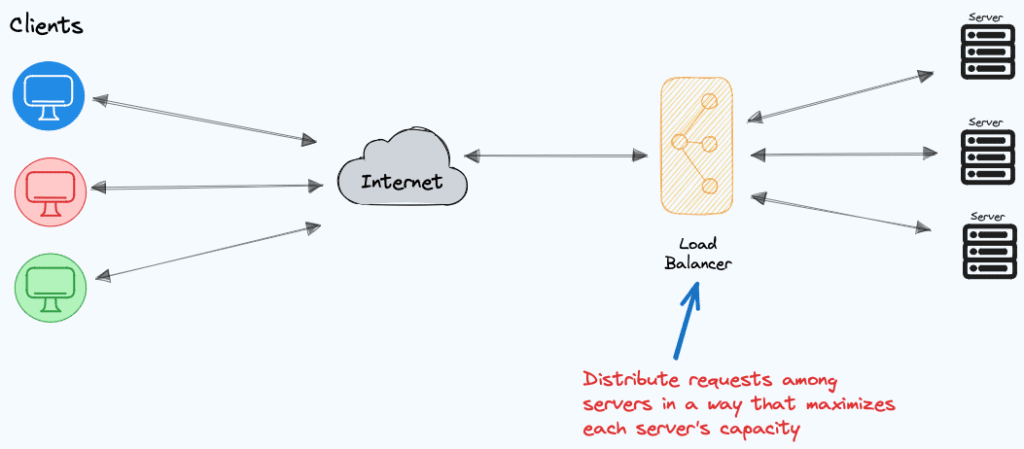

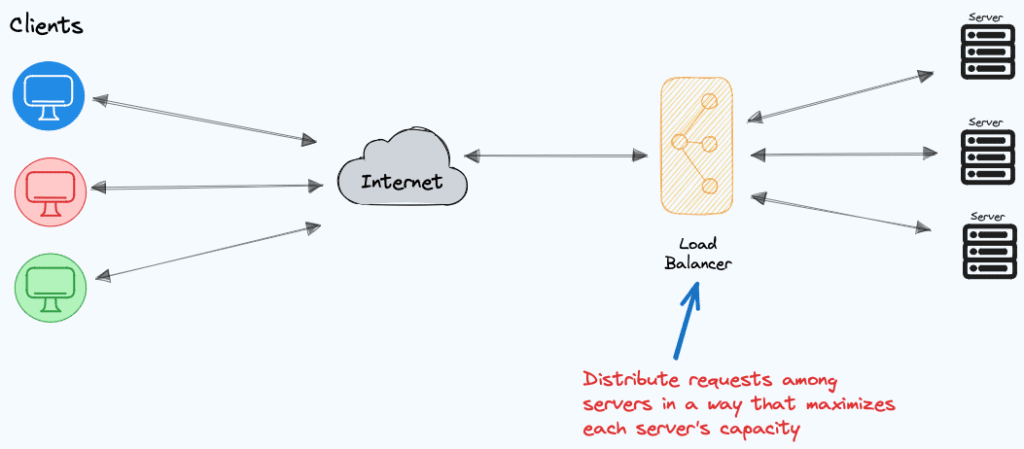

What are Load Balancers?

In system design, a load balancer is a component that distributes incoming network traffic and requests across a group of backend servers, such as web servers or application servers. This prevents any single server from being overloaded, because requests are spread across multiple servers working in parallel. The result is better system performance and resource utilisation.

To learn more about load balancers, check out the post on Load Balancer in System Design.

Different Ways Of Routing Requests Through Load Balancer

Load balancers use various algorithms to determine where to route incoming requests. Common algorithms include round robin, least connections, IP hash, weighted round robin, and least response time. Depending on the algorithm, the decision may take into account factors such as server load, response time, and server health.

Round Robin

The Round Robin load balancing algorithm distributes incoming requests in a sequential, circular manner among the available servers. It follows a straightforward rotation, ensuring that each server receives a fair share of the traffic.

Example: Consider three servers labeled A, B, and C. When a new request comes in, the Round Robin algorithm sends it to the next server in line. If we have a sequence of requests, the distribution would look like this:

- Request 1 goes to Server A.

- Request 2 goes to Server B.

- Request 3 goes to Server C.

- Request 4 goes back to Server A.

- Request 5 goes to Server B.

This cycle repeats, creating an even distribution of requests among the servers. Round Robin is simple, easy to implement, and ensures that each server gets an equal opportunity to handle incoming requests. However, it does not consider server load or capacity, so when servers differ in capability or requests differ in cost, some servers can end up under-utilized while others are overloaded.
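The rotation described above can be sketched in a few lines of Python. This is only an illustrative sketch: the class name `RoundRobinBalancer` and the method `next_server` are made up for this example and do not come from any particular load balancer.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed circular order (hypothetical helper)."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        # cycle() repeats the list forever: A, B, C, A, B, C, ...
        self._rotation = cycle(list(servers))

    def next_server(self):
        # Each call advances the rotation by one position.
        return next(self._rotation)


balancer = RoundRobinBalancer(["A", "B", "C"])
assignments = [balancer.next_server() for _ in range(5)]
print(assignments)  # ['A', 'B', 'C', 'A', 'B']
```

Note that the balancer keeps no information about the servers other than their order, which is exactly why it cannot react to differences in load or capacity.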

Least Connections

The Least Connections load balancing algorithm directs each new request to the server with the fewest active connections at that moment. This approach aims to optimize resource usage by distributing the load according to how busy each server currently is, rather than in a fixed order.

Example:

Let’s consider three servers – A, B, and C – and the current number of active connections on each:

- Server A: 5 active connections

- Server B: 3 active connections

- Server C: 7 active connections

When a new request arrives, the Least Connections algorithm would route it to Server B since it currently has the fewest active connections. This dynamic approach ensures that the load is distributed based on the real-time utilization of each server, preventing any single server from becoming overloaded.

While Least Connections is effective at balancing based on actual server load, the load balancer must keep an up-to-date count of open connections for every server, and a plain connection count does not account for differences in server capacity.
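A minimal Python sketch of this selection rule follows, using the connection counts from the example. The names `LeastConnectionsBalancer`, `acquire`, and `release` are assumptions made for illustration, and the tie-breaking rule (first server in insertion order wins) is just one possible choice.

```python
class LeastConnectionsBalancer:
    """Tracks open connections per server (hypothetical helper)."""

    def __init__(self, active_connections):
        # Maps server name -> number of currently open connections.
        self.active = dict(active_connections)

    def acquire(self):
        # min() returns the first server with the lowest count,
        # so ties go to the server listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection to the server closes.
        if self.active[server] > 0:
            self.active[server] -= 1


balancer = LeastConnectionsBalancer({"A": 5, "B": 3, "C": 7})
first = balancer.acquire()   # B has the fewest (3); B now has 4
second = balancer.acquire()  # B again (4 is still below A's 5); B now has 5
third = balancer.acquire()   # A and B tie at 5; A is listed first
print(first, second, third)  # B B A
```

The counts only stay meaningful if `release` is called whenever a connection ends, which is the bookkeeping cost this algorithm adds compared with Round Robin.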

IP Hash

The IP Hash load balancing algorithm uses a hash function on the client’s IP address to consistently map requests from the same client to the same backend server. This ensures session persistence and maintains state for clients across multiple requests.

Let’s consider a simple example using a basic hash function. In this case, let’s use a modulo operation with the number of servers to distribute the requests:

Example: Assume we have three servers – A, B, and C – and a client with the IP address 192.168.1.1.

- Convert IP to Numeric Value: Convert the client’s IP address to a numeric value. For simplicity, let’s use the sum of its decimal digits: 1 + 9 + 2 + 1 + 6 + 8 + 1 + 1 = 29.

- Apply Hash Function: Use a basic modulo operation with the number of servers (3 in this case): 29 % 3 = 2.

- Determine Server Assignment: The result of the modulo operation (2) indicates that the request should be directed to the server at index 2. Counting from zero (A = 0, B = 1, C = 2), that is Server C.

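Therefore, based on this simplistic example, the client with IP 192.168.1.1 would be consistently directed to Server C for all subsequent requests, maintaining session persistence. In real-world scenarios, more sophisticated hash functions are employed to achieve a more even distribution and better load balancing. Note that with a plain modulo mapping, the assignment holds only while the number of servers stays the same: adding or removing a server changes the result of the modulo operation for many clients.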
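The worked example can be reproduced with the Python sketch below. The toy hash adds up the decimal digits of the address, which is what the arithmetic in the example does. A second function shows the same idea with SHA-256 from the standard `hashlib` module as one possible stand-in for a "more sophisticated" hash; the function names and the choice of SHA-256 are assumptions for illustration, not what any specific load balancer uses.

```python
import hashlib

SERVERS = ["A", "B", "C"]


def digit_sum_hash(ip):
    """Toy hash from the example: add up the decimal digits of the IP."""
    return sum(int(ch) for ch in ip if ch.isdigit())


def pick_server_toy(ip, servers=SERVERS):
    # The modulo maps the hash onto a valid index into the server list.
    return servers[digit_sum_hash(ip) % len(servers)]


def pick_server_sha256(ip, servers=SERVERS):
    """Same mapping, but with a hash that spreads inputs more uniformly."""
    digest = hashlib.sha256(ip.encode("utf-8")).digest()
    # Interpret the first 8 bytes of the digest as an unsigned integer.
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


print(digit_sum_hash("192.168.1.1"))   # 29
print(pick_server_toy("192.168.1.1"))  # C
```

Both functions are deterministic, so the same IP address always maps to the same server as long as the server list does not change.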

Weighted Round Robin

The Weighted Round Robin load balancing algorithm assigns weights to each server based on its capacity. Requests are then distributed in a circular sequence, with servers receiving a number of requests proportional to their assigned weights.

Example: Consider three servers – A, B, and C – with respective weights:

- Server A: Weight 2

- Server B: Weight 1

- Server C: Weight 1

Now, let’s distribute five requests in a weighted round-robin manner:

- Request 1 goes to Server A (Weight 2).

- Request 2 goes to Server B (Weight 1).

- Request 3 goes to Server A (Weight 2).

- Request 4 goes to Server C (Weight 1).

- Request 5 goes to Server A (Weight 2).

One full cycle here is four requests long (A, B, A, C), so Request 5 starts the next cycle, and the pattern then repeats. Servers with higher weights receive a proportionally larger share of the requests: Server A handles half of the traffic, while Servers B and C handle a quarter each. This allows a customized load balancing approach that considers the capacity of each server. Weighted Round Robin is particularly useful when servers have varying capabilities or workloads.
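There are several ways to interleave servers so that the weights are respected. The Python sketch below uses one of them, often called smooth weighted round robin; it is an assumption chosen for illustration, and the class name is hypothetical. With weights 2, 1, 1 it produces the order A, B, C, A within each cycle rather than the A, B, A, C order shown in the example, but the share of requests per server is the same.

```python
class SmoothWeightedRoundRobin:
    """Weighted rotation that spreads heavy servers out (hypothetical helper)."""

    def __init__(self, weights):
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be a non-empty map of positive numbers")
        self.weights = dict(weights)
        # Running score per server; starts at zero.
        self.current = {server: 0 for server in self.weights}
        self.total = sum(self.weights.values())

    def next_server(self):
        # 1. Every server earns its weight.
        for server, weight in self.weights.items():
            self.current[server] += weight
        # 2. The server with the highest score is chosen
        #    (ties go to the server listed first).
        chosen = max(self.current, key=self.current.get)
        # 3. The chosen server pays back the total weight.
        self.current[chosen] -= self.total
        return chosen


balancer = SmoothWeightedRoundRobin({"A": 2, "B": 1, "C": 1})
sequence = [balancer.next_server() for _ in range(8)]
print(sequence)  # ['A', 'B', 'C', 'A', 'A', 'B', 'C', 'A']
```

Over the eight requests, Server A is chosen four times and Servers B and C twice each, matching the 2:1:1 weights.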

Least Response Time

The Least Response Time load balancing algorithm routes requests to the server with the shortest response time, aiming to optimize user experience by minimizing latency.

Example: Let’s consider three servers – A, B, and C – with respective response times:

- Server A: 10 milliseconds

- Server B: 15 milliseconds

- Server C: 12 milliseconds

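When a new request arrives, the Least Response Time algorithm selects the server with the shortest measured response time. The measurements are updated as responses come back, so the choice can change from one request to the next:

- The next request goes to Server A (10 milliseconds).

- If Server A then slows down so that its measured response time rises above 12 milliseconds, the following request goes to Server C (12 milliseconds).

- Once Server A’s measured response time drops back to 10 milliseconds, the next request is again directed to Server A.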

This dynamic approach ensures that requests are consistently routed to the server providing the quickest response, improving overall user experience. However, it requires continuous monitoring of response times and may be sensitive to fluctuations in server performance.
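The Python sketch below illustrates this strategy under some stated assumptions: response times are tracked as an exponentially weighted moving average with a smoothing factor of 0.5, and the names `LeastResponseTimeBalancer`, `pick`, and `record` are invented for this example. It reproduces the A, then C, then A order from the example by letting Server A's measured time rise and then fall again.

```python
class LeastResponseTimeBalancer:
    """Routes to the server with the lowest average latency (hypothetical helper)."""

    def __init__(self, initial_ms, smoothing=0.5):
        # Maps server name -> moving average of response time in milliseconds.
        self.avg_ms = dict(initial_ms)
        # Weight given to the newest observation (assumed value).
        self.smoothing = smoothing

    def pick(self):
        # Choose the server with the lowest average response time.
        return min(self.avg_ms, key=self.avg_ms.get)

    def record(self, server, observed_ms):
        # Blend the new observation into the moving average.
        old = self.avg_ms[server]
        self.avg_ms[server] = (1 - self.smoothing) * old + self.smoothing * observed_ms


balancer = LeastResponseTimeBalancer({"A": 10.0, "B": 15.0, "C": 12.0})
first = balancer.pick()     # A (10 ms is the lowest)
balancer.record("A", 20.0)  # A's average becomes 0.5 * 10 + 0.5 * 20 = 15.0
second = balancer.pick()    # C (12 ms is now the lowest)
balancer.record("A", 5.0)   # A's average becomes 0.5 * 15 + 0.5 * 5 = 10.0
third = balancer.pick()     # A again
print(first, second, third)  # A C A
```

A smaller smoothing factor makes the average react more slowly to a single slow response, which is one way to reduce the sensitivity to fluctuations mentioned above.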

Benefits Of Routing Requests Through Load Balancer

Routing requests through a load balancer has several benefits:

- Optimized Performance: Load balancers evenly distribute requests, enhancing system performance and reducing response times.

- Efficient Scalability: Facilitates easy scaling by spreading traffic across servers, ensuring effective resource utilization.

- High Availability: Enhances system availability by redirecting traffic to healthy servers, ensuring uninterrupted service.

- Session Persistence: Maintains continuity in user sessions through features like IP Hashing, supporting a seamless user experience.

Drawbacks Of Routing Requests Through Load Balancer

- Single Point of Failure: A central load balancer can become a single point of failure, impacting the entire system if it malfunctions.

- Complexity and Configuration Overhead: Implementing and managing load balancers can introduce complexity and require careful configuration, especially in large-scale environments.

- Potential Bottlenecks: In certain scenarios, load balancers themselves can become bottlenecks, especially if not properly sized or configured.

- Added Latency: The additional network hop introduced by a load balancer can contribute to increased latency in some cases.

FAQ

What criteria does a load balancer use to decide the destination for incoming requests?

Load balancers make routing decisions using algorithms such as Round Robin, Least Connections, IP Hash, Weighted Round Robin, or Least Response Time. Depending on the algorithm, the decision considers factors like server load, response times, server weights, or client persistence.

How do load balancers contribute to system resilience in the event of server failures?

Load balancers enhance system resilience by redirecting traffic to healthy servers, ensuring uninterrupted service and minimizing the impact of individual server failures.

What role does session persistence play in load balancing, and how is it achieved?

Session persistence in load balancing, often achieved through techniques like IP Hashing, ensures that requests from the same client consistently go to the same server, maintaining continuity in user sessions for a seamless experience.