In this article we will look into latency in system design: how latency is introduced into systems and the various ways to reduce it. So, let’s get started.

What is Latency In System Design?

Latency in system design refers to the time delay between the input into a system and the desired outcome. It measures the time taken to process a given transaction or request in a system.

For example:

When a user searches for a product on an e-commerce website, there is a delay between clicking search and receiving results. This latency includes the time for the browser to send the request, for the application servers to process the search query and fetch data from databases, and for the response page to be constructed, sent back, and rendered. The higher this overall latency, the worse the user experience on the website.

How does Latency Work?

Latency measures the responsiveness of a system: it is the time interval between a stimulus and the system’s response. When a request is sent to a system, it takes some time to reach the system, be processed, and have its output returned. This total delay is the latency.

For example, when you click a link on a website, there is some delay before the webpage loads. This is the latency: the time taken for the request to traverse the internet and for the web server to respond with the webpage’s HTML. Lower latency means a faster-loading webpage.

Why is latency important in System Design?

- User Experience: Lower latency results in faster response times and improved user experience. High latency leads to laggy, unusable applications.

- Business Metrics: Latency impacts key business metrics like conversion rates, customer satisfaction scores and revenue.

- Scalability: Systems designed for low latency tend to scale better for higher loads and distributed deployments.

- Fault Tolerance: Low latency architectures often have improved resilience by preventing widespread failures through isolation.

What causes Latency in a System?

Some common causes of latency include:

Transmission Mediums

Different transmission mediums, such as fiber optic cables, copper wires, and radio waves, all propagate signals at finite speeds. This causes an inherent propagation delay that contributes to overall latency.

Wireless links generally have higher latency than wired fiber or copper links. This is not because radio waves travel more slowly; in air they propagate at close to the speed of light, faster than signals in fiber or copper. Instead, the extra delay comes from shared-medium access, interference, and signal attenuation, which cause errors that must be handled by retransmissions and error correction before the correct data is received.

Propagation Delays

The physical distance a signal must travel from source to destination adds latency in proportion to that distance: the one-way propagation delay is the distance divided by the signal’s propagation speed. The greater the geographical spread, the higher the propagation delay.
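As a rough worked example of the distance-to-delay relationship, the sketch below assumes signals in optical fiber travel at about 200,000 km/s (roughly two-thirds of the speed of light in vacuum) and ignores routing detours. The 10,000 km distance and the `propagation_delay_ms` helper are illustrative assumptions, not measured values.

```python
# Assumed signal speed in optical fiber: roughly two-thirds of the speed of
# light in vacuum, rounded to 200,000 km/s for illustration.
FIBER_SPEED_KM_PER_S = 200_000


def propagation_delay_ms(distance_km: float,
                         speed_km_per_s: float = FIBER_SPEED_KM_PER_S) -> float:
    """One-way propagation delay in milliseconds: distance / speed."""
    return distance_km * 1000 / speed_km_per_s


# Hypothetical 10,000 km fiber path between two distant data centers.
one_way = propagation_delay_ms(10_000)
print(f"one-way: {one_way:.1f} ms, round trip: {2 * one_way:.1f} ms")
# one-way: 50.0 ms, round trip: 100.0 ms
```

Even at this idealized speed, such a long-haul round trip costs on the order of 100 ms before any router or server time is added, so physical distance sets a floor on latency.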

Routers/Switches Processing

At each router and switch along the path, time is spent buffering packets, making routing decisions, and waiting in queues for transmission; these delays accumulate into the end-to-end latency.

Server Processing Delay

The time application servers take to process requests according to their execution logic adds to latency; disk I/O, data access, computation, etc. all take some finite time.

Storage Access Delay

Storage access latency is the time needed to load data into memory from network or I/O interfaces, or to read it from secondary storage, for example while a hard disk’s moving head seeks to the right location.

Optimizing the network paths and each of these physical or logical components helps lower system latency.

Types of Latency in System Design

Here are the types of latency in system design explained further:

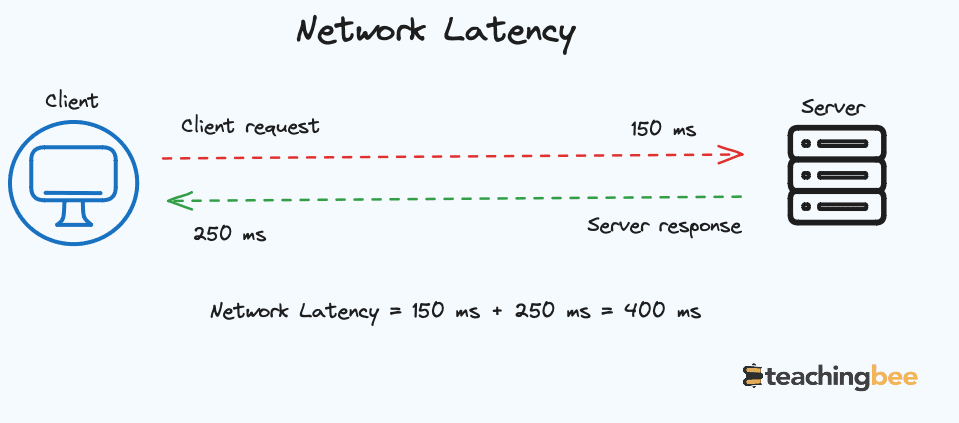

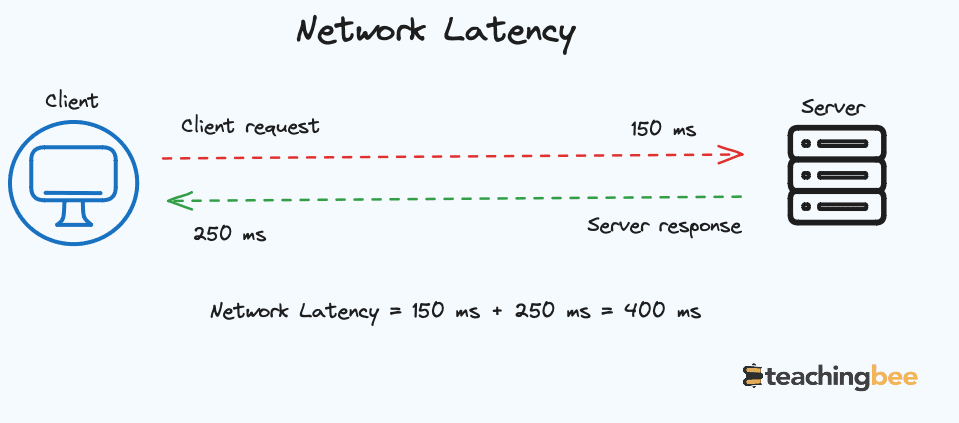

Network Latency

Network latency describes the time it takes for data packets to travel from source to destination through various network devices. It includes propagation delay, which is the time the packet takes to physically travel through wires or fiber optics between nodes, because signals move through these media at a finite speed.

At each hop, transmission delay is the time needed to push all of the packet’s bits onto the outgoing link, which equals the packet size divided by the link bandwidth; because routers typically use store-and-forward switching, a packet must be fully received before it can be sent on to the next hop. Inside routers, processing delay occurs for computing routes, reading headers, performing error checks, etc. Lastly, when there is an excess inflow of packets, they wait in router buffers, and this waiting time is known as queueing delay.
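The four per-hop components can be combined in a small model: transmission delay is packet size divided by bandwidth, propagation delay is distance divided by signal speed, and processing and queueing delays are taken as given. The hop values below (link speeds, distances, processing and queueing times), the assumed 200,000 km/s fiber speed, and the function names are all hypothetical, chosen only to show how the components add up along a path.

```python
from dataclasses import dataclass

FIBER_SPEED_KM_PER_S = 200_000  # assumed signal speed in fiber


@dataclass
class Hop:
    bandwidth_bps: float   # outgoing link bandwidth
    distance_km: float     # length of the outgoing link
    processing_ms: float   # header parsing, route lookup, error checks
    queueing_ms: float     # time spent waiting in the router's buffer


def transmission_delay_ms(packet_bytes: int, bandwidth_bps: float) -> float:
    """Time to push every bit of the packet onto the link."""
    return packet_bytes * 8 * 1000 / bandwidth_bps


def propagation_delay_ms(distance_km: float) -> float:
    """Time for the signal to travel the physical length of the link."""
    return distance_km * 1000 / FIBER_SPEED_KM_PER_S


def hop_delay_ms(packet_bytes: int, hop: Hop) -> float:
    """Per-hop delay = processing + queueing + transmission + propagation."""
    return (hop.processing_ms + hop.queueing_ms
            + transmission_delay_ms(packet_bytes, hop.bandwidth_bps)
            + propagation_delay_ms(hop.distance_km))


# Hypothetical three-hop path for a 1,500-byte packet.
path = [
    Hop(bandwidth_bps=100e6, distance_km=20, processing_ms=0.05, queueing_ms=0.5),
    Hop(bandwidth_bps=1e9, distance_km=800, processing_ms=0.02, queueing_ms=2.0),
    Hop(bandwidth_bps=10e9, distance_km=50, processing_ms=0.02, queueing_ms=0.1),
]
total = sum(hop_delay_ms(1500, hop) for hop in path)
print(f"one-way path delay: {total:.2f} ms")
```

In this made-up path, propagation and queueing dominate, while transmission delay on fast links is a small fraction of a millisecond.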

Application Latency

In contrast to pure network latency, application latency covers the delays users experience before they see an application’s response. On the client side, users experience poor performance due to slow device processing or browser rendering times.

On the server side, application code introduces significant delays: handling the request/response cycle, running computation or business logic (which depends on the system architecture), and reading or writing data from storage or databases (which depends on I/O access speed).

Geographic Latency

For large-scale distributed systems serving customers across continents, the sheer physical distance packets have to traverse adds latency, for example when transmitting data between data centers in Asia-Pacific and Latin America. The “last mile” also plays a role: the connection between the user’s premises and the local ISP, along with the hops from local ISPs through backbone networks to edge computing sites, adds its own latency.

Browser Latency

The browser software also bears responsibility for latency related to rendering Web graphical interfaces, parsing HTML, loading stylesheets, executing JavaScript code and more client-side operations vital for usability.

Data Store Latency

Latency occurs when interacting with persistent data stores owing to physical constraints such as disk access times, memory load times, and database query processing times. This includes disk-head seek time, data transfer time, index lookup time for queries, and caching effects.

How to measure latency in System Design?

There are a few key ways in which latency is measured in system design:

- Ping Tests: The round-trip time of an echo request/reply packet gives the latency between two connected devices or systems.

- Instrumentation: Timers, logs, and traces added at the code level measure the latency of service calls, database transactions, etc.

- Analytic Tools: Monitoring and observability tools, such as application performance monitoring (APM) tools, track system metrics.

- User Testing: Tests with real users measure responsiveness from the user’s perspective, capturing user-experienced latency.

- MTR: MTR is a network diagnostic tool that combines the functionality of ping and traceroute. It repeatedly sends probes (ICMP echo requests by default) from source to destination and reports metrics such as packet loss, latency, and jitter on a hop-by-hop basis along the path. This makes it possible to identify high-latency hops.

- Traceroute: Traceroute maps the journey of packets from source to destination by listing all routers along the path. It prints the hop-by-hop latency for each intermediate router in the path between a source and target server. This reveals the contribution of latency by different infrastructure segments.

The goal is to measure the different contributors end to end in order to identify areas for optimization that lower system latency. Both quantitative metrics and user-centric quality attributes are considered for latency-focused systems.
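The instrumentation approach can be sketched in Python with a timing decorator that records per-call latency and reports percentiles, since tail latency (p95/p99) often matters more to users than the average. The `timed` decorator, the `percentiles` helper, and `fake_db_query` (which just sleeps for 5 ms) are hypothetical; a production system would typically export these samples to a monitoring or APM tool rather than keep them in a dictionary.

```python
import statistics
import time
from functools import wraps

# Collected latency samples in milliseconds, keyed by operation name.
latencies_ms: dict[str, list[float]] = {}


def timed(name: str):
    """Decorator that records how long each call to the wrapped function takes."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            finally:
                elapsed_ms = (time.perf_counter() - start) * 1000
                latencies_ms.setdefault(name, []).append(elapsed_ms)
        return wrapper
    return decorator


def percentiles(samples: list[float]) -> dict[str, float]:
    """p50/p95/p99 of the recorded samples (needs at least two samples)."""
    cuts = statistics.quantiles(samples, n=100)  # 99 cut points
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


@timed("fake_db_query")
def fake_db_query() -> None:
    # Hypothetical stand-in for a real database call.
    time.sleep(0.005)


for _ in range(50):
    fake_db_query()
print({k: round(v, 2) for k, v in percentiles(latencies_ms["fake_db_query"]).items()})
```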

For example, let’s say we have a simple web application with the following components:

- User’s browser

- Internet connection (e.g. home broadband)

- Routing from ISP to data center

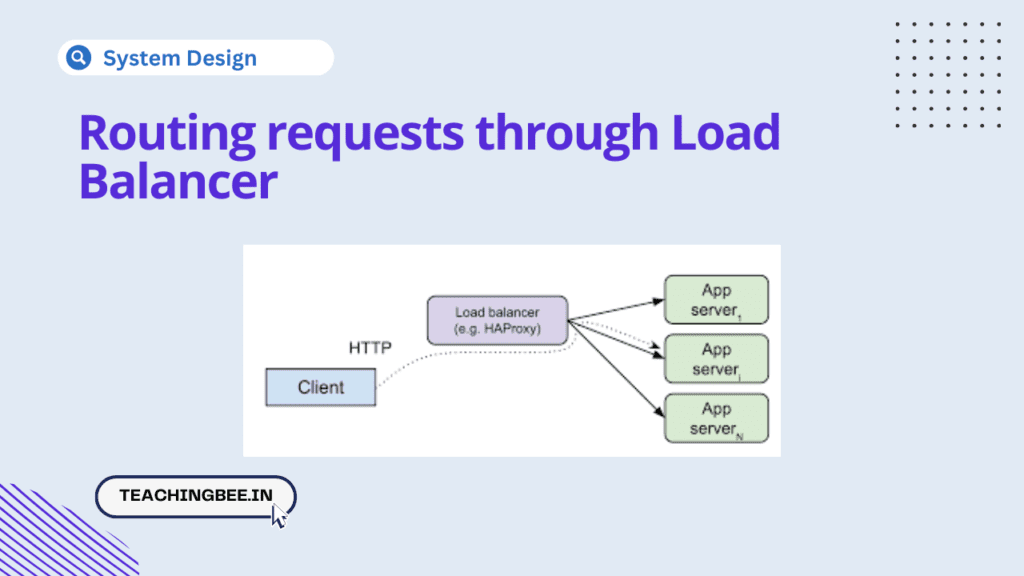

- Load balancer

- Web server

- Database query

- Web server response

- Routing back to user

We can assign estimated latency values to each hop:

- User’s browser: 50 ms

- Home broadband connection: 20 ms

- Routing to data center: 100 ms

- Load balancer: 10 ms

- Web server processing: 100 ms

- Database query: 50 ms

- Web server response: 20 ms

- Routing back to user: 100 ms

Adding these up gives us a total round-trip latency of 450 ms.

So in this example, it would take approximately 450 ms from when the user clicks something to when they receive the response back from the server. These figures are illustrative estimates; real values vary with network conditions and server load.
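The arithmetic above can be kept as a small latency budget, which also makes it easy to see which components are worth optimizing first. The dictionary simply restates the estimated values from the example; the variable names are illustrative.

```python
# Estimated latency (ms) for each hop, taken from the example above.
latency_budget_ms = {
    "User's browser": 50,
    "Home broadband connection": 20,
    "Routing to data center": 100,
    "Load balancer": 10,
    "Web server processing": 100,
    "Database query": 50,
    "Web server response": 20,
    "Routing back to user": 100,
}

total_ms = sum(latency_budget_ms.values())
print(f"Total round trip: {total_ms} ms")  # Total round trip: 450 ms

# Share of the total contributed by each component, largest first.
for component, ms in sorted(latency_budget_ms.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{component:<28}{ms:>5} ms  {ms / total_ms:6.1%}")
```

Here the two routing legs and web server processing together account for two thirds of the total (300 of 450 ms), so they would be the first places to look.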

How to Optimize Latency in System Design?

Some key ways to reduce latency in system design are:

Optimize Network Pathways

Choose network connectivity and an architecture that minimize the number of network hops and the long-distance traffic flowing between end users, edge servers, application servers, and backend databases.

Carefully selecting the cloud regions and zones closest to your user base reduces geographic latency. Another way is to use dedicated high-throughput links between data centers to keep inter-data-center latency low.
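One way to act on region selection is to measure round-trip times from a representative client location to each candidate region and pick the lowest. The sketch below assumes the RTT samples have already been collected (for example with ping); the region names and values are hypothetical, and the median is used so that a single noisy sample does not skew the choice.

```python
import statistics


def choose_region(rtt_samples_ms: dict[str, list[float]]) -> str:
    """Return the region whose median measured round-trip time is lowest."""
    return min(rtt_samples_ms, key=lambda region: statistics.median(rtt_samples_ms[region]))


# Hypothetical RTT measurements (ms) from one user location to three regions.
samples = {
    "region-east": [82.0, 85.5, 80.1],
    "region-west": [24.3, 31.0, 25.7],
    "region-apac": [190.2, 185.0, 201.4],
}
print(choose_region(samples))  # region-west
```

In practice you would aggregate such measurements across many user locations before choosing.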

Implement networking devices like load balancers between components to optimize traffic routing. Leverage CDNs (Content Delivery Networks) to cache and distribute content closer to end users.

Scale Compute Resources

Horizontally scale application servers to handle increasing loads. Auto scaling groups can spin up VMs/containers based on load. Also, distribute processing across many small/medium application instances rather than few large ones.

Scaling vertically, by upgrading a server’s CPU, memory, or storage, increases capacity and can also reduce latency by shortening query processing time.
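A simple queueing model helps explain why adding capacity lowers latency, not just throughput. The sketch below assumes each server behaves like an M/M/1 queue (random arrivals, random service times, one worker), where the mean time a request spends in the system is 1 / (service rate - arrival rate). The request rates are hypothetical, and real servers deviate from this idealized model.

```python
def mm1_response_time_ms(arrival_rate: float, service_rate: float) -> float:
    """Mean time in system for an M/M/1 queue, in milliseconds.

    Rates are in requests per second.
    """
    if arrival_rate >= service_rate:
        raise ValueError("unstable: arrival rate must be below service rate")
    return 1000 / (service_rate - arrival_rate)


def scaled_out_response_time_ms(arrival_rate: float, service_rate: float,
                                servers: int) -> float:
    """Assumes traffic is split evenly across independent M/M/1 servers."""
    return mm1_response_time_ms(arrival_rate / servers, service_rate)


load = 80  # hypothetical requests per second
print(mm1_response_time_ms(load, 100))            # baseline: 50.0 ms
print(mm1_response_time_ms(load, 200))            # vertical: 2x faster server, about 8.3 ms
print(scaled_out_response_time_ms(load, 100, 2))  # horizontal: 2 servers, about 16.7 ms
```

Under this model, latency grows sharply as the arrival rate approaches the service rate, so keeping utilization comfortably below 100% is part of why both scaling strategies help.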

Code Optimization and Async Processing

Use asynchronous, non-blocking I/O operations and parallel processing instead of synchronous blocking calls, so that one slow operation does not tie up the server’s resources while it waits. Optimizing database usage with efficient table structures, indexes, and queries reduces query time and therefore latency.
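The benefit of non-blocking I/O can be sketched with Python’s `asyncio`: three independent I/O-bound calls awaited one after another take roughly the sum of their delays, while running them concurrently with `asyncio.gather` takes roughly the longest single delay. The `fetch` coroutine is a hypothetical stand-in that simulates a 100 ms network call with `asyncio.sleep`.

```python
import asyncio
import time

SOURCES = ("users", "orders", "prices")


async def fetch(name: str, delay_s: float = 0.1) -> str:
    # Hypothetical I/O call; asyncio.sleep yields to the event loop while "waiting".
    await asyncio.sleep(delay_s)
    return name


async def sequential() -> list[str]:
    # Each call waits for the previous one to finish.
    return [await fetch(name) for name in SOURCES]


async def concurrent() -> list[str]:
    # All calls are in flight at the same time.
    return list(await asyncio.gather(*(fetch(name) for name in SOURCES)))


for runner in (sequential, concurrent):
    start = time.perf_counter()
    result = asyncio.run(runner())
    elapsed_ms = (time.perf_counter() - start) * 1000
    print(f"{runner.__name__}: {result} in {elapsed_ms:.0f} ms")
```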

Use caching systems like Memcached to store frequently used results and reduce database load. Also, streamlining logic flows to avoid unnecessary delays and using a worker pattern to move slow operations off the request path can help reduce latency.
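The caching idea can be sketched as the cache-aside pattern: check the cache first, and only on a miss query the database and store the result. To stay self-contained, the sketch uses an in-process dictionary with a time-to-live instead of Memcached. `TTLCache` and `slow_db_lookup` (which sleeps 50 ms to mimic a query) are hypothetical names.

```python
import time


class TTLCache:
    """Minimal in-process cache-aside helper; entries expire after ttl_s seconds."""

    def __init__(self, ttl_s: float):
        self.ttl_s = ttl_s
        self._store: dict = {}  # key -> (value, time stored)

    def get_or_load(self, key, loader):
        entry = self._store.get(key)
        if entry is not None and time.monotonic() - entry[1] < self.ttl_s:
            return entry[0]  # hit: skip the slow loader entirely
        value = loader(key)  # miss (or expired): go to the backing store
        self._store[key] = (value, time.monotonic())
        return value


db_calls = 0


def slow_db_lookup(product_id: int) -> dict:
    """Hypothetical database query that takes about 50 ms."""
    global db_calls
    db_calls += 1
    time.sleep(0.05)
    return {"id": product_id, "name": f"product-{product_id}"}


cache = TTLCache(ttl_s=60)
for _ in range(3):
    start = time.perf_counter()
    cache.get_or_load(42, slow_db_lookup)
    print(f"{(time.perf_counter() - start) * 1000:.1f} ms")
print("database calls:", db_calls)  # database calls: 1
```

The first request pays the full query cost and the next two are served from memory; a shared cache such as Memcached or Redis applies the same pattern across many application servers.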

Compression and Caching

Enable gzip or Brotli compression on web servers so that assets are smaller and travel over the network faster. Implement application caches using Redis or Memcached to reuse data instead of running expensive DB queries.
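The effect of compression on transfer size can be checked with Python’s standard `gzip` module. The repetitive HTML snippet is a made-up payload; real pages usually compress less dramatically, and the achievable ratio depends on the content.

```python
import gzip

# Made-up, highly repetitive HTML payload (real pages are less repetitive).
html = ("<li class='product'>Example product</li>\n" * 500).encode("utf-8")

compressed = gzip.compress(html)
assert gzip.decompress(compressed) == html  # lossless round trip

print(f"original: {len(html)} bytes, gzip: {len(compressed)} bytes "
      f"({len(compressed) / len(html):.1%} of original)")
```

Fewer bytes on the wire mean less transmission time, at the cost of some CPU time to compress and decompress.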

Use local SSDs for databases instead of EBS or other network-attached storage, since local SSDs typically offer higher IOPS and lower access latency. Caching common requests and computation results so they can be reused instead of reprocessed reduces DB load and query time, and therefore latency.

FAQ

Why does latency vary across different devices and applications?

Latency discrepancies arise from diverse hardware capabilities, network conditions, and software optimizations. Understanding these variables is essential for tailoring system designs to deliver consistent performance across a range of environments.

Can quantum computing reduce latency significantly?

Quantum computing could speed up certain classes of computation, which might shorten processing time for those specific tasks. It does not reduce network or propagation latency, and practical applications remain largely experimental.

How does latency impact user perception in virtual reality applications?

In virtual reality (VR), latency directly influences the sense of immersion. Achieving low latency is critical to preventing motion sickness and providing users with a seamless and realistic virtual experience, making it a pivotal consideration in VR system design.